How to Cache Data in Spark, with Hands-On Practice! - TIL230725

📚 KDT WEEK 17 DAY 2 TIL

- Caching theory and practice

- Caching Best Practices

🎟 Caching

Caching keeps frequently used DataFrames in memory to speed up processing.

It also increases memory consumption, so there is no reason to cache everything indiscriminately.

How to cache a DataFrame

- Use cache() or persist(); the data is then stored in memory or on disk.

- Both are lazily evaluated, so nothing is cached until the data is actually needed.

- Caching always works in units of whole partitions, so a partition is never partially cached.

Practice

1. Caching a DataFrame with .cache()

# SparkSession available as 'spark'

from pyspark.sql.functions import expr

# Build a DataFrame of the numbers 1 to 99999 with a column (square) holding each square >> df10_square

df = spark.range(1, 100000).toDF("id")

df10 = df.repartition(10)

df10_square = df10.withColumn("square", expr("id*id"))

# caching

df10_square.cache() # nothing is cached yet at this point

df10_square.take(10) # fetch 10 rows; only the partitions needed for them are cached here

df10_square.count() # every row is needed for the count, so all data is cached here

df10_square.unpersist() # release the cache; this takes effect immediately

.take(10) needs only 10 rows, so just one partition was cached instead of all of them,

whereas .count() needs every row, so you can confirm that all 10 partitions were cached.

2. Caching with a Spark SQL query

# Try caching through Spark SQL

df10_square.createOrReplaceTempView("df10_square")

spark.sql("CACHE TABLE df10_square") # with Spark SQL, all data is cached right away

# spark.sql("CACHE LAZY TABLE df10_square") # adding LAZY defers caching until the data is needed

spark.sql("UNCACHE TABLE df10_square") # release the cache

spark.catalog.isCached("df10_square") # checks whether df10_square is currently cached > False

So when is the cached data actually used?

The Spark optimizer does not always recognize that a semantically equivalent query could be answered from the data already cached in memory.

# Cache a result filtered by a specific condition

df10_squared_filtered = df10_square.select("id", "square").filter("id > 50000").cache()

df10_squared_filtered.count()

# Semantically the same as above; only the order of operations differs

df10_square.filter("id > 50000").select("id","square").count()

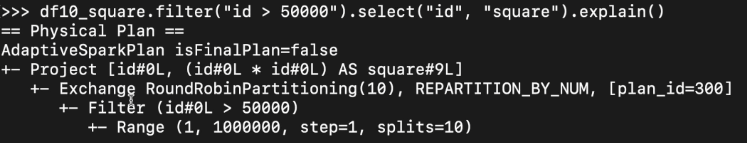

# Print the physical plan; nothing in it indicates that the data was read from memory.

df10_square.filter("id > 50000").select("id","square").explain()

When a table held in memory is used, the plan contains the text InMemoryTableScan.

If you run exactly the same expression (df10_square.select("id", "square").filter("id > 50000")) or build further operations on the cached variable (df10_squared_filtered), the cached data is used.

3. Caching with persist()

import pyspark

# Pass pyspark.StorageLevel(arguments) to .persist() to control how the data is stored

# As with cache(), nothing is cached right away; caching happens on first use

df_persisted = df10.withColumn("square", expr("id*id")).persist(

pyspark.StorageLevel(False, True, False, True, 1)

)

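The arguments of pyspark.StorageLevel have the following meanings, in order.

- useDisk : whether to store the data on disk

- useMemory : whether to store the data in memory

- useOffHeap : whether to store the data in off-heap memory (off-heap memory must be configured)

- deserialized : whether to keep the data in deserialized form; True uses more memory but less CPU, and is only possible for data held in memory

- replication : how many copies to store on different executors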

🏆 Caching Best Practices

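- When using cached data, reuse it explicitly through the cached variable

- For datasets with many columns, cache only the columns you need

- Uncache when the data is no longer needed

- Sometimes recomputing every time can be faster than caching!

- > When a large dataset in Parquet format is cached

- > When the cached result is too large to fit in memory and is also written to disk, and so on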
