
Implementing an Airflow ETL from MySQL ➡️ Redshift - TIL230609

📚 KDT WEEK 10 DAY 4 TIL

- Implementing the MySQL ➡️ Redshift Airflow ETL

- Preliminary setup

- ETL code

- Running a Backfill

🟥 Implementing an Airflow ETL from MySQL (OLTP) to Redshift (OLAP)

Let's use Airflow to implement an ETL that transfers data from the production database (MySQL) to the data warehouse (Redshift).

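🛠️ Preliminary setup

1. Permissions

First, each side needs permission to access the other.

- Airflow DAG access to S3: create an AWS IAM User (with read and write permission on the S3 bucket) and obtain its access key and secret key

- See https://sunhokimdev.tistory.com/34 > Snowflake practice > Accessing AWS from Snowflake

- Redshift access to S3: create a Role that can access S3 and attach it to Redshift

- See https://sunhokimdev.tistory.com/32 > Redshift bulk update + practice > the part that starts with "Through IAM"

These permissions are set up with S3FullAccess (listed as AmazonS3FullAccess in the IAM console).

If S3FullAccess feels too broad, go to Create policy > JSON while creating the permission and list only the S3 bucket you will use under "Resource".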
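The bucket-scoped alternative mentioned above can be sketched as follows. This is a minimal illustration, not the policy the author actually used: the helper name `bucket_scoped_policy` is hypothetical, and the action list (`s3:ListBucket`, `s3:GetObject`, `s3:PutObject`) is an assumption about what this pipeline needs. The bucket name is the one used later in the DAG code.

```python
import json


def bucket_scoped_policy(bucket: str) -> dict:
    """Build an IAM policy document limited to a single S3 bucket.

    Hypothetical helper; the action list is an assumption and may need
    extending (for example with s3:DeleteObject) depending on your setup.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing applies to the bucket itself
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [f"arn:aws:s3:::{bucket}"],
            },
            {
                # Reading and writing apply to the objects inside the bucket
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/*"],
            },
        ],
    }


policy = bucket_scoped_policy("grepp-data-engineering")
print(json.dumps(policy, indent=2))
```

The printed JSON is what would be pasted into Create policy > JSON, in place of attaching the full-access policy.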

2. MySQL setup in Docker

Log in to the Airflow Scheduler Docker container as the root user and run the following.

docker ps # find the CONTAINER ID of airflow-scheduler with this command

docker exec --user root -it CONTAINERID sh # enter the Airflow scheduler as root, using the CONTAINER ID found above

sudo apt-get update # refresh the apt-get package index

sudo apt-get install -y default-libmysqlclient-dev # install the MySQL client development library

sudo apt-get install -y gcc # install the C compiler needed to build the MySQL client module

sudo pip3 install --ignore-installed "apache-airflow-providers-mysql" # (re)install the Airflow MySQL module

3. Airflow setup

Next, the Airflow Connections need to be configured.

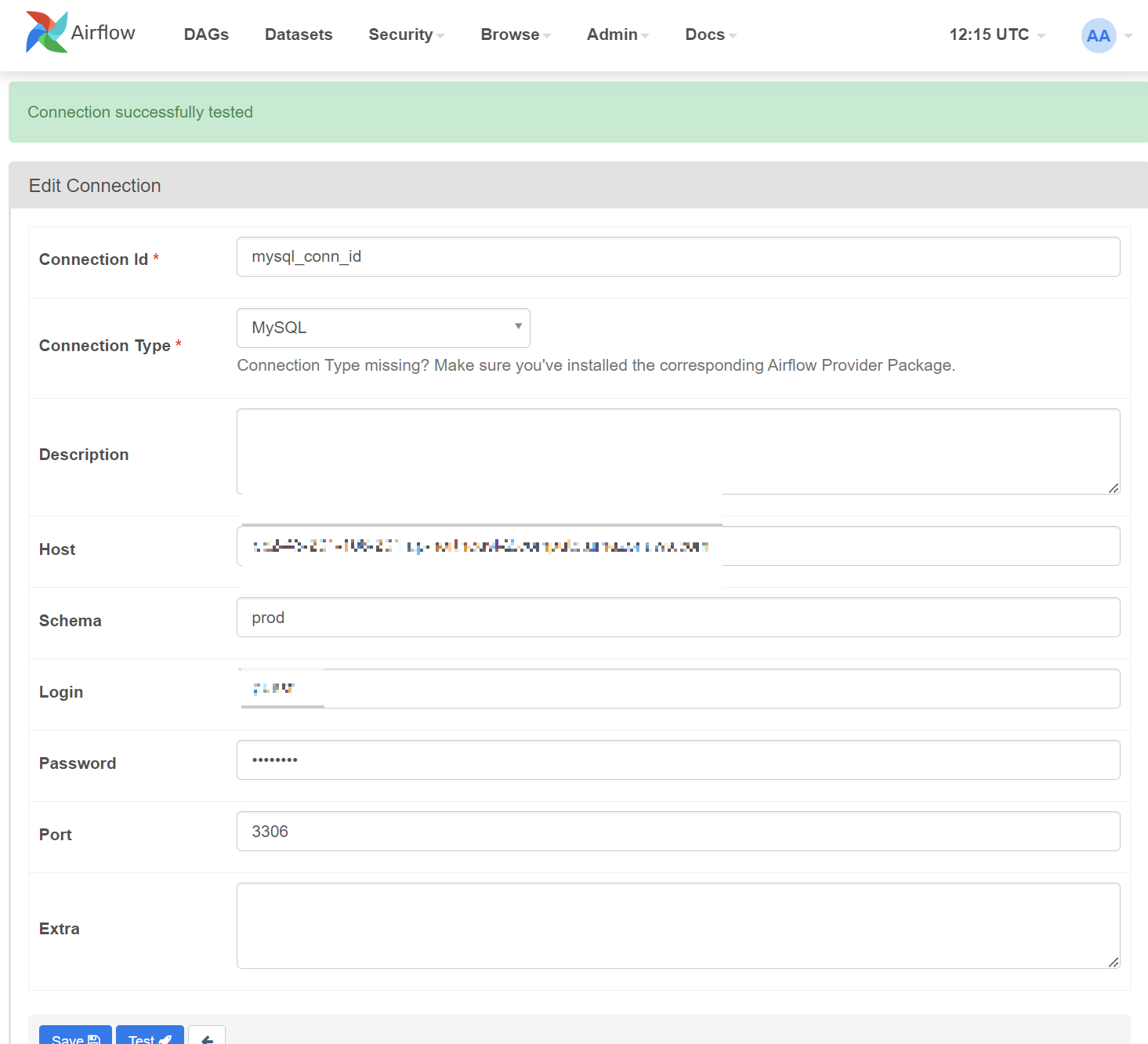

1) MySQL

In the Airflow web UI, go to Admin > Connections and add a MySQL connection.

2) AWS S3

In the Airflow web UI, go to Admin > Connections and add an Amazon Web Services connection.

Enter the Access Key and Secret Access Key you received when creating the IAM user.
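The post sets these Connections up in the web UI. As an alternative sketch, Airflow can also read a Connection from an environment variable named `AIRFLOW_CONN_<CONN_ID in upper case>` whose value is a connection URI. The helper below only builds such URIs; the host, user, password and key values are made-up placeholders, and `conn_uri` is a hypothetical name. The connection IDs match the ones used in the DAG code below.

```python
from urllib.parse import quote


def conn_uri(scheme, login, password, host="", port=None, schema=""):
    """Build a connection URI, percent-encoding the credentials.

    Hypothetical helper: special characters such as '/', '+' or '@' in a
    password or secret key must be encoded or the URI will not parse.
    """
    auth = f"{quote(login, safe='')}:{quote(password, safe='')}@"
    netloc = f"{host}:{port}" if port else host
    path = f"/{schema}" if schema else ""
    return f"{scheme}://{auth}{netloc}{path}"


# Placeholder values, not real credentials
mysql_uri = conn_uri("mysql", "etl_user", "p@ss/word", "mysql.example.com", 3306, "prod")
aws_uri = conn_uri("aws", "AKIAEXAMPLEKEY", "secret/with+symbols")

print("AIRFLOW_CONN_MYSQL_CONN_ID=" + mysql_uri)
print("AIRFLOW_CONN_AWS_CONN_ID=" + aws_uri)
```

Connections defined this way do not appear in the Admin > Connections list, so the web UI route described above is easier to inspect while learning.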

4. Data and table setup

MySQL setup (data already loaded)

CREATE TABLE prod.nps(

id INT NOT NULL AUTO_INCREMENT primary key,

created_at timestamp,

score smallint

);

Create a table in Redshift with the same fields and data types.

This table is where the data transferred by the ETL will be loaded.
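The post does not show the Redshift DDL, so here is a minimal sketch of what the matching table could look like. It assumes the schema and table names used later in the DAG (`sunhokim_public`, `nps`). `AUTO_INCREMENT` is dropped because it is MySQL syntax that Redshift does not accept, and the ids arrive from MySQL anyway. Note that Redshift treats `PRIMARY KEY` as informational and does not enforce it.

```python
schema = "sunhokim_public"  # Redshift schema, as in the DAG code
table = "nps"               # Redshift table, as in the DAG code

# Same columns and types as prod.nps in MySQL, minus AUTO_INCREMENT
redshift_ddl = f"""
CREATE TABLE {schema}.{table} (
    id INT NOT NULL PRIMARY KEY,
    created_at TIMESTAMP,
    score SMALLINT
);
""".strip()

print(redshift_ddl)
```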

⚙️ Features used

Airflow Operator

- SqlToS3Operator : an Operator that loads the result of a MySQL SQL query into S3

- S3ToRedshiftOperator : an Operator that loads data from S3 into a Redshift table (uses the COPY command)

🧩 Code

ETL code (Full Refresh)

from airflow import DAG

from airflow.operators.python import PythonOperator

from airflow.providers.amazon.aws.transfers.sql_to_s3 import SqlToS3Operator

from airflow.providers.amazon.aws.transfers.s3_to_redshift import S3ToRedshiftOperator

from airflow.models import Variable

from datetime import datetime

from datetime import timedelta

import requests

import logging

import psycopg2

import json

dag = DAG(

dag_id = 'MySQL_to_Redshift',

start_date = datetime(2022,8,24), # the DAG does not run if this date is in the future

schedule = '0 9 * * *', # every day at 09:00

max_active_runs = 1, # so that multiple runs of this DAG do not execute at the same time

catchup = False,

default_args = {

'retries': 1,

'retry_delay': timedelta(minutes=3),

}

)

schema = "sunhokim_public" # Redshift schema to load into

table = "nps" # Redshift table to load into

s3_bucket = "grepp-data-engineering" # S3 bucket that holds the intermediate data

s3_key = schema + "-" + table # S3 key (path) the data is written to

mysql_to_s3_nps = SqlToS3Operator(

task_id = 'mysql_to_s3_nps', # set the task_id

query = "SELECT * FROM prod.nps", # every row of the prod.nps table in MySQL

s3_bucket = s3_bucket,

s3_key = s3_key,

sql_conn_id = "mysql_conn_id", # MySQL Connection ID set in the Airflow web UI

aws_conn_id = "aws_conn_id", # S3 Connection ID set in the Airflow web UI

verify = False,

replace = True, # whether to overwrite when the same file already exists at the S3 path

pd_kwargs={"index": False, "header": False}, # leave out the index and header (pandas options)

dag = dag

)

s3_to_redshift_nps = S3ToRedshiftOperator(

task_id = 's3_to_redshift_nps', # set the task_id

s3_bucket = s3_bucket,

s3_key = s3_key,

schema = schema,

table = table,

copy_options=['csv'], # the source data in S3 is a csv file

method = 'REPLACE', # Full Refresh > the data already in the Redshift table is completely replaced

redshift_conn_id = "redshift_dev_db", # Redshift Connection ID set in the Airflow web UI

aws_conn_id = "aws_conn_id",

dag = dag

)

mysql_to_s3_nps >> s3_to_redshift_nps

How the data is loaded depends on the method passed to S3ToRedshiftOperator.

With method = 'REPLACE', the table contents are completely replaced.

Besides REPLACE there are also UPSERT and APPEND; UPSERT is covered below.

ETL code (Incremental Update)

Only the operators that changed from the Full Refresh code above are shown.

Here the method = 'UPSERT' approach is used.

mysql_to_s3_nps = SqlToS3Operator(

task_id = 'mysql_to_s3_nps',

# With UPSERT, the SQL needs a date filter. Airflow's execution_date is used for this.

# Airflow replaces {{ execution_date }} with the execution_date value of the run.

query = "SELECT * FROM prod.nps WHERE DATE(created_at) = DATE('{{ execution_date }}')",

s3_bucket = s3_bucket,

s3_key = s3_key,

sql_conn_id = "mysql_conn_id",

aws_conn_id = "aws_conn_id",

verify = False,

replace = True,

pd_kwargs={"index": False, "header": False},

dag = dag

)

s3_to_redshift_nps = S3ToRedshiftOperator(

task_id = 's3_to_redshift_nps',

s3_bucket = s3_bucket,

s3_key = s3_key,

schema = schema,

table = table,

copy_options=['csv'],

redshift_conn_id = "redshift_dev_db",

aws_conn_id = "aws_conn_id",

method = "UPSERT", # use the UPSERT method

upsert_keys = ["id"], # the primary-key field(s); used to detect duplicates during the UPSERT

dag = dag

)

mysql_to_s3_nps >> s3_to_redshift_nps

The UPSERT method works with the key fields given in upsert_keys.

UPSERT loads data so that rows already present are updated and new rows are inserted.

upsert_keys takes the Primary Key field(s) used to identify duplicate rows.
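The difference between the three method values can be illustrated with a small in-memory simulation. This is only a sketch of the loading semantics described above, using plain Python lists of dicts. It does not reproduce how S3ToRedshiftOperator works internally, and the function name `load` is hypothetical.

```python
def load(existing, incoming, method, key="id"):
    """Simulate REPLACE / APPEND / UPSERT on lists of row dicts."""
    if method == "REPLACE":
        # Full Refresh: the old rows are discarded entirely
        return list(incoming)
    if method == "APPEND":
        # Rows are simply added, so duplicates can appear
        return list(existing) + list(incoming)
    if method == "UPSERT":
        # Rows sharing a key are overwritten, new keys are inserted
        merged = {row[key]: row for row in existing}
        merged.update({row[key]: row for row in incoming})
        return list(merged.values())
    raise ValueError(f"unknown method: {method}")


existing = [{"id": 1, "score": 5}, {"id": 2, "score": 7}]
incoming = [{"id": 2, "score": 9}, {"id": 3, "score": 10}]

for method in ("REPLACE", "APPEND", "UPSERT"):
    print(method, load(existing, incoming, method))
```

With APPEND the row with id 2 ends up in the table twice, which is why an incremental load that may be re-run uses UPSERT with the primary key instead.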

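Also, the Full Refresh version needs no Backfill, so none was implemented (you can simply run it again).

The Incremental Update version, however, needs a change to the SQL to support Backfill, and this is where execution_date comes in.

Looking at the SqlToS3Operator query, it fetches only the rows whose creation date matches execution_date.

As a rule of thumb, think of execution_date as the date of the data you want to fetch (the date the data was created).

Airflow manages execution_date for you, so when a Backfill runs, execution_date is filled in automatically, either with the dates on which the pipeline failed or with the dates the user requested.

Put these files in the dags folder of your Airflow directory and run them.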

🔙 Running a Backfill

Let's use the Incremental Update ETL code from above to check that Backfill works correctly.

To reload one month of past data, July 2018, use the following command (replace dag_id with the id of the DAG).

airflow dags backfill dag_id -s 2018-07-01 -e 2018-08-01

There are a few things to watch out for.

- The DAG code must have catchup = True set.

- The DAG must be implemented as an Incremental Update that uses execution_date.

- start_date (2018-07-01) is included, but end_date (2018-08-01) is not.

- The runs are not executed in date order (July 1, July 2, July 3, ...); the order is arbitrary.

- To run in date order, default_args = {... 'depends_on_past' : True ... } is needed.

- Running a Backfill one run at a time with max_active_runs = 1 is the safer choice.
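To make the date range concrete, the sketch below lists the days a Backfill with `-s 2018-07-01 -e 2018-08-01` would cover under the convention stated above (start included, end excluded). It is plain date arithmetic and does not call Airflow. The function name `backfill_dates` is hypothetical.

```python
from datetime import date, timedelta


def backfill_dates(start: date, end: date) -> list:
    """Return every day from start (inclusive) up to end (exclusive)."""
    days = (end - start).days
    return [start + timedelta(days=i) for i in range(days)]


dates = backfill_dates(date(2018, 7, 1), date(2018, 8, 1))
print(len(dates), dates[0], dates[-1])
```

Each of these days corresponds to one run whose execution_date selects that day's rows in the incremental query.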
