
Airflow DAG - TIL230607

📚 KDT WEEK 10 DAY 3 TIL

- Porting example programs to Airflow
- Writing an API DAG

🟥 Hello World DAG example program

Understanding the DAG structure

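```python
from datetime import datetime

from airflow import DAG

dag = DAG(
    dag_id='HelloWorld',              # DAG ID
    start_date=datetime(2022, 5, 5),  # start date
    catchup=False,                    # see the explanation below
    tags=['example'],                 # DAG tags
    schedule='0 2 * * *',             # minute, hour, day of month, month, day of week: runs every day at 02:00 (UTC unless another timezone is configured)
)
```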
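The schedule string above is a standard five-field cron expression. As a rough illustration of what '0 2 * * *' means, here is a minimal sketch that computes the next matching time for daily expressions only. `next_daily_run` is a hypothetical helper, not part of Airflow, which does its own cron handling.

```python
from datetime import datetime, timedelta

def next_daily_run(expr: str, after: datetime) -> datetime:
    """Return the first time strictly after `after` that matches a daily
    cron expression of the form 'M H * * *' (hypothetical helper)."""
    fields = expr.split()
    # Only handle "minute hour * * *"; day/month/weekday restrictions are out of scope.
    if len(fields) != 5 or fields[2:] != ["*", "*", "*"]:
        raise ValueError("only 'M H * * *' expressions are supported")
    minute, hour = int(fields[0]), int(fields[1])
    candidate = after.replace(hour=hour, minute=minute, second=0, microsecond=0)
    if candidate <= after:
        # Today's slot has already passed (or is exactly now): use tomorrow's.
        candidate += timedelta(days=1)
    return candidate

print(next_daily_run("0 2 * * *", datetime(2023, 6, 7, 1, 30)))  # 2023-06-07 02:00:00
print(next_daily_run("0 2 * * *", datetime(2023, 6, 7, 2, 0)))   # 2023-06-08 02:00:00
```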

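- max_active_runs: the number of runs of this DAG that can be active at the same time (important when backfilling; in practice it is capped by the total CPUs allocated to the workers)
- max_active_tasks: the number of this DAG's tasks that can run at the same time
- catchup: whether the scheduler should also run intervals that were missed
  - if start_date is in the past, catchup=True creates a run for every past interval that has not been executed yet, while catchup=False schedules only the most recent one
  - the same applies to intervals missed while the DAG was paused or turned off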
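To make catchup concrete, here is a simplified model of a daily schedule. It is an assumption for illustration, not Airflow's scheduler code. Each run covers the interval [logical_date, logical_date + 1 day) and is triggered only after that interval ends. `runs_to_schedule` is a hypothetical helper.

```python
from datetime import datetime, timedelta

def runs_to_schedule(start, now, catchup):
    """Simplified daily-schedule model (hypothetical helper): each run covers
    [logical_date, logical_date + 1 day) and becomes due once that interval ends."""
    interval = timedelta(days=1)
    logical_dates = []
    current = start
    while current + interval <= now:
        logical_dates.append(current)
        current += interval
    if not catchup:
        # Without catchup, only the most recent completed interval is scheduled.
        return logical_dates[-1:]
    return logical_dates

start = datetime(2023, 6, 1, 2, 0)
now = datetime(2023, 6, 5, 3, 0)
print(len(runs_to_schedule(start, now, catchup=True)))  # 4
print(runs_to_schedule(start, now, catchup=False))      # [datetime.datetime(2023, 6, 4, 2, 0)]
```

With catchup=True, the four missed days become four runs. With max_active_runs=1, those runs would execute one after another rather than all at once.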

Let's try PythonOperator.

```python
from datetime import datetime

from airflow import DAG
from airflow.operators.python import PythonOperator

# Function called by the PythonOperator (defined before the operator that refers to it)
def python_func(**cxt):
    # Parameters passed via `params` are read as cxt["params"]["key"].
    table = cxt["params"]["table"]
    schema = cxt["params"]["schema"]
    ex_date = cxt["execution_date"]  # deprecated alias of logical_date since Airflow 2.2
    # do what you need to do

# DAG definition
dag = DAG(
    dag_id='HelloWorld',
    start_date=datetime(2022, 5, 5),
    catchup=False,
    tags=['example'],
    schedule='0 2 * * *',
)

# PythonOperator definition
load_nps = PythonOperator(
    dag=dag,
    task_id='task_id',            # name used as the task's ID
    python_callable=python_func,  # function to call when the task runs
    # Parameters can also be passed to the function, as a dictionary.
    params={
        'table': 'delighted_nps',
        'schema': 'raw_data',
    },
)
```

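The PythonOperator takes the DAG it belongs to and the function to call, and it calls that function when the task runs.

If `params` is given to the PythonOperator as a dictionary, the function can read those values.

They are accessed as cxt["params"]["key"], where cxt is whatever name the function uses for its ** keyword argument.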
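Because the callable only receives keyword arguments, it can be exercised outside Airflow by passing a hand-built dictionary. Here is a minimal sketch for local testing. This variant of python_func returns a string so the result is visible. `fake_context` is hypothetical and holds only the keys the function reads; the real Airflow context carries many more.

```python
from datetime import datetime

def python_func(**cxt):
    # Same access pattern as in the DAG: params arrive under the "params" key.
    table = cxt["params"]["table"]
    schema = cxt["params"]["schema"]
    ex_date = cxt["execution_date"]
    return f"{schema}.{table} @ {ex_date:%Y-%m-%d}"

# Hand-built stand-in for the context Airflow passes (hypothetical values;
# only the keys python_func reads are included).
fake_context = {
    "params": {"table": "delighted_nps", "schema": "raw_data"},
    "execution_date": datetime(2023, 6, 7),
}
print(python_func(**fake_context))  # raw_data.delighted_nps @ 2023-06-07
```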

Example

```python
from datetime import datetime

from airflow import DAG
from airflow.operators.python import PythonOperator

# DAG
dag = DAG(
    dag_id='HelloWorld',
    start_date=datetime(2022, 5, 5),
    catchup=False,
    tags=['example'],
    schedule='0 2 * * *',
)

# Define two functions, hello and goodbye.
def print_hello():
    print("hello!")
    return "hello!"

def print_goodbye():
    print("goodbye!")
    return "goodbye!"

# Define a PythonOperator for each of the two functions.
print_hello = PythonOperator(
    task_id='print_hello',
    # python_callable param points to the function you want to run
    python_callable=print_hello,
    # dag param points to the DAG that this task is a part of
    dag=dag,
)

print_goodbye = PythonOperator(
    task_id='print_goodbye',
    python_callable=print_goodbye,
    dag=dag,
)

# Declare the execution order:
# the print_goodbye task runs only after the print_hello task has finished.
print_hello >> print_goodbye
```
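The >> operator only records a dependency between two tasks. The toy class below mimics that idea. `MiniTask` and `run_chain` are hypothetical and are not Airflow classes. Here, `a >> b` adds b to a's downstream list and returns b. Airflow's operators also return the right-hand side, which is what lets `a >> b >> c` chain left to right.

```python
class MiniTask:
    """Toy stand-in for an Airflow task (hypothetical), only to show what `a >> b` records."""

    def __init__(self, task_id, func):
        self.task_id = task_id
        self.func = func
        self.downstream = []

    def __rshift__(self, other):
        # `self >> other` means "run other after self".
        self.downstream.append(other)
        return other  # returning the right-hand side lets a >> b >> c chain

def run_chain(first):
    """Follow a linear chain of tasks and collect each task's return value."""
    results, task = [], first
    while task is not None:
        results.append(task.func())
        task = task.downstream[0] if task.downstream else None
    return results

hello = MiniTask("print_hello", lambda: "hello!")
goodbye = MiniTask("print_goodbye", lambda: "goodbye!")
hello >> goodbye
print([t.task_id for t in hello.downstream])  # ['print_goodbye']
print(run_chain(hello))                       # ['hello!', 'goodbye!']
```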

Task Decorator

This time, let's use the Task Decorator that Airflow provides.

The structure becomes simpler than before.

```python
from airflow import DAG
from airflow.decorators import task
from datetime import datetime

@task
def print_hello():
    print("hello!")
    return "hello!"

@task
def print_goodbye():
    print("goodbye!")
    return "goodbye!"

with DAG(
    dag_id='HelloWorld_v2',
    start_date=datetime(2022, 5, 5),
    catchup=False,
    tags=['example'],
    schedule='0 2 * * *'
) as dag:
    # Assign the tasks to the DAG in order
    print_hello() >> print_goodbye()
```

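Placing the @task decorator above a function turns the function itself into a task, so no separate Operator definition is needed.

When you declare the order inside the DAG, call the decorated functions, as in print_hello() >> print_goodbye(). Each call creates the corresponding task.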
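Why does calling print_hello() inside the DAG create a task instead of printing? The toy decorator below sketches that idea only. `toy_task` and `ToyTask` are hypothetical; Airflow's real @task returns an XComArg wired to an operator. Calling the decorated function defers the body and hands back a task object named after the function.

```python
import functools

class ToyTask:
    """Minimal task object (hypothetical): an id, a deferred call, downstream links."""

    def __init__(self, task_id, call):
        self.task_id = task_id
        self.call = call
        self.downstream = []

    def __rshift__(self, other):
        self.downstream.append(other)
        return other

def toy_task(func):
    """Toy decorator: calling the decorated function builds a ToyTask named after
    the function instead of running the function body right away."""
    @functools.wraps(func)
    def factory(*args, **kwargs):
        return ToyTask(func.__name__, lambda: func(*args, **kwargs))
    return factory

@toy_task
def print_hello():
    return "hello!"

@toy_task
def print_goodbye():
    return "goodbye!"

first = print_hello()  # nothing runs yet; a ToyTask is returned
second = print_goodbye()
first >> second
print(first.task_id, "->", first.downstream[0].task_id)  # print_hello -> print_goodbye
print(first.call(), second.call())                       # hello! goodbye!
```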

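🟦 Porting the S3 CSV ETL example program to Airflow

Let's port the ETL exercise from the previous post, which ran in Colab, so that it runs on Airflow.

(link)

In short, that code INSERTs name_gender.csv (name and gender columns), stored in S3, into my Redshift table.

We'll look at two ways of porting this ETL: as a single task and as multiple tasks.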

🔧 Building it as a single Task

First, let's port extract, transform, and load as one task.

Because it is a single task, a single PythonOperator is used.

Add the following code:

```python
# (imports and the extract/transform/load helpers are defined in the full code below)

def etl(**context):
    link = context["params"]["url"]             # read from the PythonOperator's params
    task_instance = context['task_instance']    # information about the running task instance
    execution_date = context['execution_date']  # scheduling-related value, covered later
    logging.info(execution_date)

    # extract, transform and load are chained here so they run as one task.
    data = extract(link)
    lines = transform(data)
    load(lines)

dag = DAG(
    dag_id='name_gender_v2',
    start_date=datetime(2023, 4, 6),  # if this date is in the future, nothing runs until it is reached
    schedule='0 2 * * *',             # runs every day at 02:00
    catchup=False,
    max_active_runs=1,                # prevent several runs from executing at once
    default_args={
        'retries': 1,
        'retry_delay': timedelta(minutes=3),
    },
)

task = PythonOperator(
    task_id='perform_etl',
    python_callable=etl,  # the function that bundles the whole ETL into one task
    params={
        'url': "enter the S3 URL here",
    },
    dag=dag,
)
```

The context["params"]["url"] read in etl comes from the params defined on the PythonOperator, as explained earlier in this post.

A single function, etl, runs Extract, Transform, and Load, and it is passed to the PythonOperator.

For the dag, set the schedule and other options as explained above.

Full code

```python
from airflow import DAG
from airflow.operators.python import PythonOperator
from airflow.models import Variable

from datetime import datetime
from datetime import timedelta
import requests
import logging
import psycopg2

def get_Redshift_connection():
    host = "Redshift endpoint address"
    redshift_user = "ID"
    redshift_pass = "PASSWORD"
    port = 5439  # Redshift port
    dbname = "DB name"
    conn = psycopg2.connect(f"dbname={dbname} user={redshift_user} host={host} password={redshift_pass} port={port}")
    conn.set_session(autocommit=True)
    return conn.cursor()

def extract(url):
    logging.info("Extract started")
    f = requests.get(url)
    logging.info("Extract done")
    return f.text

def transform(text):
    logging.info("Transform started")
    lines = text.strip().split("\n")[1:]  # skip the first (header) line
    records = []
    for l in lines:
        (name, gender) = l.split(",")  # CSV file: name,gender
        records.append([name, gender])
    logging.info("Transform ended")
    return records

def load(records):
    logging.info("load started")
    schema = "Redshift schema name"
    cur = get_Redshift_connection()
    try:
        cur.execute("BEGIN;")
        cur.execute(f"DELETE FROM {schema}.name_gender;")  # clear the name_gender table in this schema
        for r in records:
            name = r[0]
            gender = r[1]
            print(name, "-", gender)
            # Insert into name_gender; %s placeholders let psycopg2 quote the values safely
            sql = f"INSERT INTO {schema}.name_gender VALUES (%s, %s)"
            cur.execute(sql, (name, gender))
        cur.execute("COMMIT;")  # equivalent to cur.execute("END;")
    except (Exception, psycopg2.DatabaseError) as error:
        print(error)
        cur.execute("ROLLBACK;")
        raise  # re-raise so Airflow marks the task as failed and can retry it
    logging.info("load done")

def etl(**context):
    link = context["params"]["url"]
    task_instance = context['task_instance']
    execution_date = context['execution_date']
    logging.info(execution_date)
    data = extract(link)
    lines = transform(data)
    load(lines)

dag = DAG(
    dag_id='name_gender_v2',
    start_date=datetime(2023, 4, 6),
    schedule='0 2 * * *',
    catchup=False,
    max_active_runs=1,
    default_args={
        'retries': 1,
        'retry_delay': timedelta(minutes=3),
    },
)

task = PythonOperator(
    task_id='perform_etl',
    python_callable=etl,
    params={
        'url': "S3 CSV file URL",
    },
    dag=dag,
)
```
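Two details of the ETL above are easy to get wrong. The first is parsing CSV by splitting on "\n" and ",". The second is keeping the DELETE and the INSERTs atomic. The sketch below illustrates both with the standard library only. It uses an in-memory sqlite3 database as a stand-in for Redshift, which is an assumption for illustration: sqlite3 uses `?` placeholders, while psycopg2 uses `%s`. The `transform` and `load` here are hypothetical variants, not the code above.

```python
import csv
import io
import sqlite3

def transform(text):
    """Parse 'name,gender' CSV text into [name, gender] records, skipping the header.
    csv.reader also handles quoted fields and CRLF line endings."""
    reader = csv.reader(io.StringIO(text.strip()))
    next(reader, None)  # skip the header row
    return [[name.strip(), gender.strip()] for name, gender in reader]

def load(conn, table, records):
    """Replace the table's rows in one transaction (hypothetical helper).
    Values go through ? placeholders; the table name is interpolated, so it
    must come from trusted configuration, never from the data."""
    with conn:  # sqlite3: commit on success, roll back if an exception escapes
        conn.execute(f"DELETE FROM {table}")
        conn.executemany(f"INSERT INTO {table} VALUES (?, ?)", records)

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE name_gender (name TEXT, gender TEXT)")

records = transform('name,gender\r\nAlice,F\r\n"O\'Brien, Pat",M\r\n')
load(conn, "name_gender", records)
print(conn.execute("SELECT * FROM name_gender ORDER BY rowid").fetchall())
# [('Alice', 'F'), ("O'Brien, Pat", 'M')]

# A bad batch fails midway; the DELETE is rolled back with it.
try:
    load(conn, "name_gender", [["incomplete row"]])
except sqlite3.Error as error:
    print("rolled back:", error)
print(conn.execute("SELECT COUNT(*) FROM name_gender").fetchone()[0])  # 2
```

The quoted name containing both a comma and an apostrophe is the case that breaks a plain `split(",")` and string-formatted SQL.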

🛠️ Splitting it into multiple Tasks

This time, let's implement extract, transform, and load as separate tasks.

The key question here is how the tasks pass values to one another.

Airflow uses XCom for this.

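XCom

- A mechanism for passing data between tasks (operators)
- Usually one operator's return value is read by another operator
- The data is stored in the Airflow metadata DB, so it is not suited to passing large data
- For large data, the usual approach is to upload it to S3 or similar and pass only its location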

Values are fetched by calling xcom_pull on the task instance:

```python
context["task_instance"].xcom_pull(key="return_value", task_ids="transform")
```

This returns the return value of the task whose task_id is transform.
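Conceptually, XCom is a key-value store keyed by task and key. A PythonOperator's return value is saved under the key "return_value". The in-memory toy below shows extract, transform, and load passing values this way. `ToyXCom` and `run_task` are hypothetical. Real XComs live in the metadata DB and are also keyed by DAG and run.

```python
class ToyXCom:
    """In-memory stand-in for the XCom table (hypothetical): values keyed by (task_id, key)."""

    def __init__(self):
        self._store = {}

    def push(self, task_id, value, key="return_value"):
        self._store[(task_id, key)] = value

    def pull(self, task_ids, key="return_value"):
        return self._store.get((task_ids, key))

def run_task(xcom, task_id, func):
    # Like PythonOperator: the callable's return value is stored under "return_value".
    xcom.push(task_id, func(xcom))

xcom = ToyXCom()
run_task(xcom, "extract", lambda x: "name,gender\nAlice,F\nBob,M")
run_task(xcom, "transform",
         lambda x: [line.split(",") for line in x.pull("extract").split("\n")[1:]])
run_task(xcom, "load", lambda x: len(x.pull("transform")))
print(xcom.pull("transform"))  # [['Alice', 'F'], ['Bob', 'M']]
print(xcom.pull("load"))       # 2
```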

Now let's look at the functions and PythonOperators that changed from the previous code once the ETL is split into multiple tasks.

```python
# Every function now receives the context as keyword arguments.
def extract(**context):
    link = context["params"]["url"]
    task_instance = context['task_instance']
    execution_date = context['execution_date']
    logging.info(execution_date)
    f = requests.get(link)
    return f.text

def transform(**context):
    logging.info("Transform started")
    # Use XCom to receive extract's return value.
    text = context["task_instance"].xcom_pull(key="return_value", task_ids="extract")
    lines = text.strip().split("\n")[1:]
    records = []
    for l in lines:
        (name, gender) = l.split(",")  # CSV file: name,gender
        records.append([name, gender])
    logging.info("Transform ended")
    return records

def load(**context):
    logging.info("load started")
    # Read the schema and table values defined on the PythonOperator.
    schema = context["params"]["schema"]
    table = context["params"]["table"]
    # Fetch transform's return value through XCom.
    records = context["task_instance"].xcom_pull(key="return_value", task_ids="transform")
    cur = get_Redshift_connection()
    try:
        cur.execute("BEGIN;")
        cur.execute(f"DELETE FROM {schema}.{table};")
        for r in records:
            name = r[0]
            gender = r[1]
            print(name, "-", gender)
            sql = f"INSERT INTO {schema}.{table} VALUES (%s, %s)"
            cur.execute(sql, (name, gender))
        cur.execute("COMMIT;")  # equivalent to cur.execute("END;")
    except (Exception, psycopg2.DatabaseError) as error:
        print(error)
        cur.execute("ROLLBACK;")
        raise  # let Airflow mark the task as failed
    logging.info("load done")

extract = PythonOperator(
    task_id='extract',
    python_callable=extract,
    params={
        'url': Variable.get("csv_url"),  # explained right below
    },
    dag=dag)

transform = PythonOperator(
    task_id='transform',
    python_callable=transform,
    params={},
    dag=dag)

load = PythonOperator(
    task_id='load',
    python_callable=load,
    params={
        'schema': 'Redshift schema name',
        'table': 'name_gender',  # store the rows in a table named name_gender
    },
    dag=dag)

extract >> transform >> load  # declare the task order
```

The dag used by these PythonOperators is the same as before, so it is omitted here. The imports and get_Redshift_connection are also unchanged from the full code above.

Each task receives the values it needs through its own PythonOperator's params, and it receives the previous task's return value through XCom.

Variable.get("csv_url") loads a value stored in Airflow itself rather than in the code; it is explained right below.

🟩 Managing sensitive information in Airflow

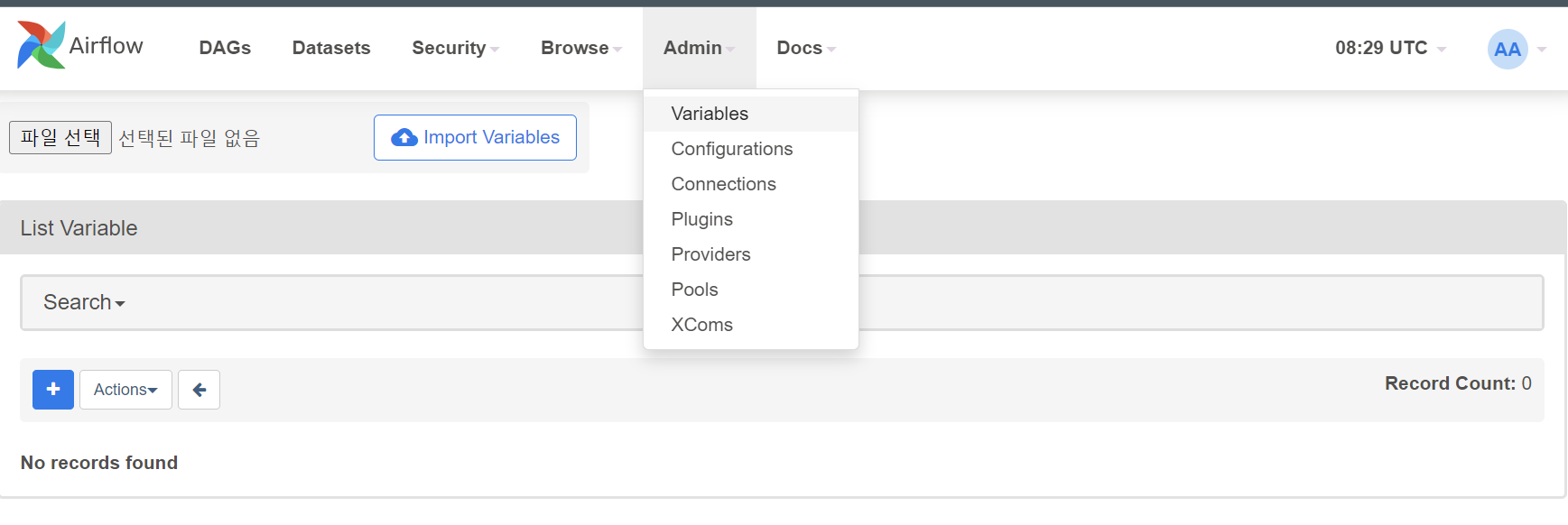

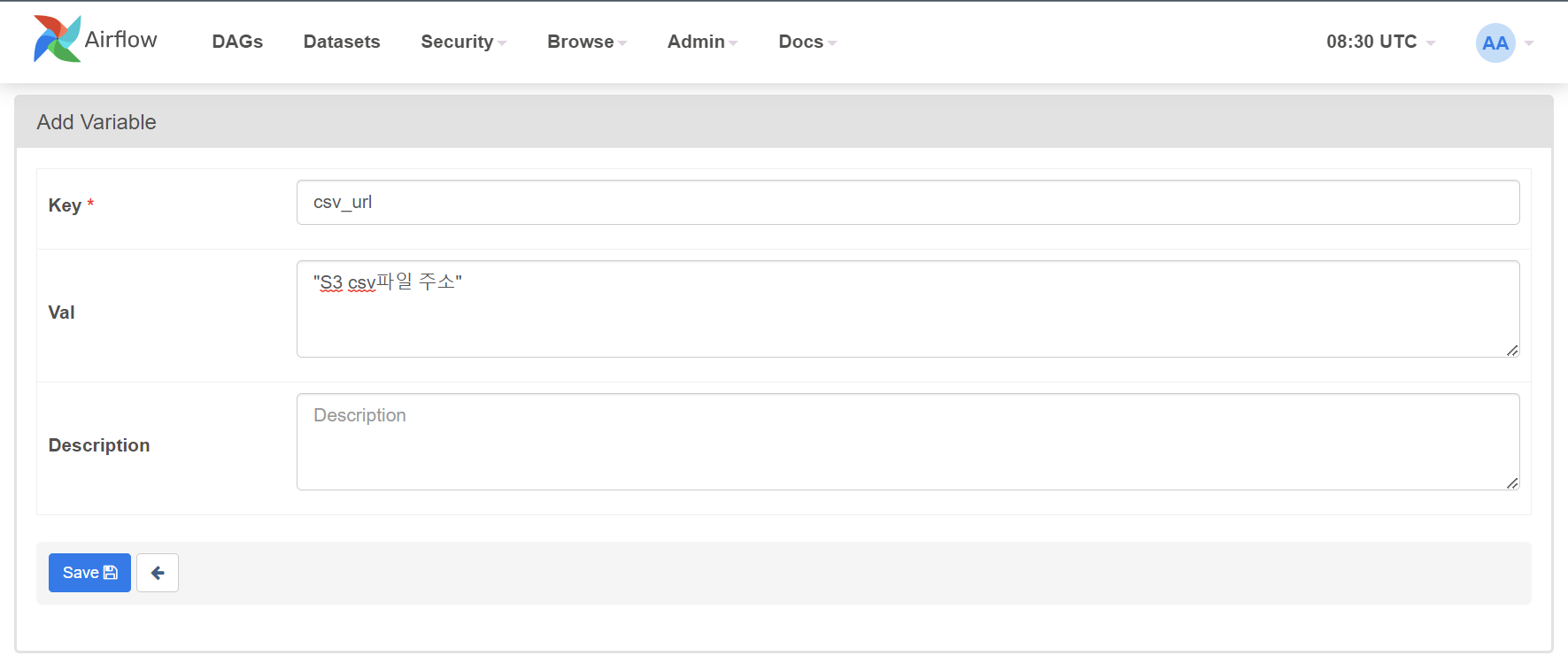

The Variable.get("csv_url") used in the extract PythonOperator reads the url stored as an Airflow Variable.

To add one, go to Admin > Variables in the Airflow web UI and click the + button.

This way, values configured and saved as Airflow Variables can be loaded and used from DAG code.
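Besides the web UI, Airflow can also resolve a Variable from an environment variable named AIRFLOW_VAR_ followed by the uppercased key. This is handy in Docker setups. The helper below only mirrors that naming convention in plain Python. `get_variable` and the example URL are hypothetical. In DAG code you would keep calling Variable.get, which also accepts `default_var` so that a missing Variable does not fail at import time.

```python
import os

def get_variable(key, default=None):
    """Hypothetical helper mirroring Airflow's environment-variable convention:
    a Variable named `csv_url` can be supplied as AIRFLOW_VAR_CSV_URL."""
    return os.environ.get(f"AIRFLOW_VAR_{key.upper()}", default)

os.environ["AIRFLOW_VAR_CSV_URL"] = "https://example.com/name_gender.csv"  # example value
print(get_variable("csv_url"))                       # https://example.com/name_gender.csv
print(get_variable("not_set_anywhere", "fallback"))  # fallback
```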

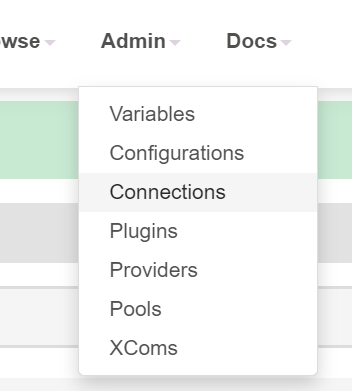

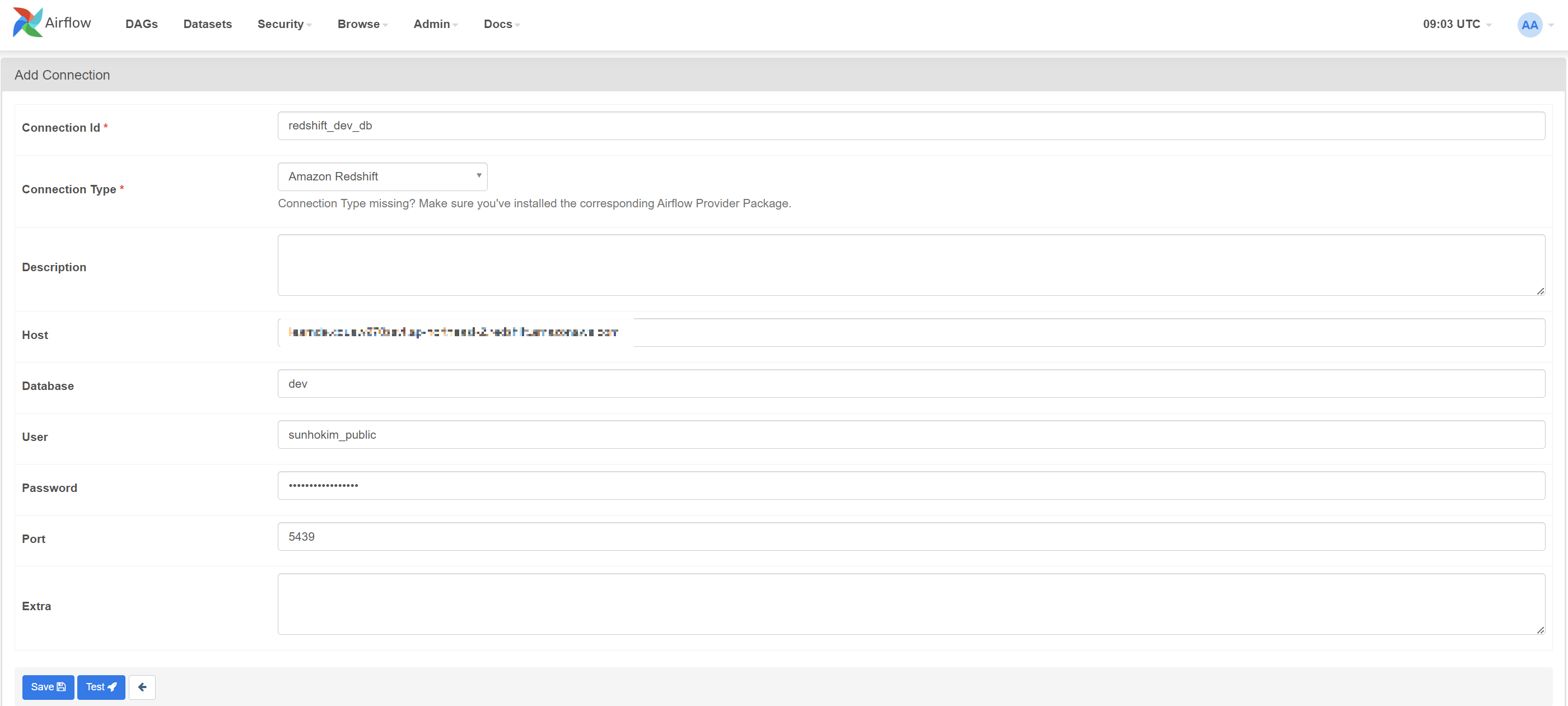

Next, let's store the Redshift connection information in Airflow.

Code can then use it right away by referring to the Connection id entered here.
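An Airflow Connection can also be supplied as a URI in an environment variable named AIRFLOW_CONN_<CONN_ID>. Inside a DAG, a stored connection is typically used through a hook, such as `PostgresHook(postgres_conn_id="redshift_dev_db").get_conn()` from the apache-airflow-providers-postgres package; the conn id there is hypothetical. The sketch below shows which pieces a Connection holds. It parses such a URI into the keyword DSN that get_Redshift_connection builds by hand. `dsn_from_conn_uri` and the credentials are made up.

```python
from urllib.parse import unquote, urlsplit

def dsn_from_conn_uri(uri):
    """Turn a connection URI (the format Airflow accepts in AIRFLOW_CONN_<CONN_ID>
    environment variables) into a libpq keyword DSN for psycopg2.connect.
    Values containing spaces or quotes would need extra escaping (not handled)."""
    parts = urlsplit(uri)
    fields = {
        "host": parts.hostname,
        "port": parts.port,
        "user": unquote(parts.username or ""),
        "password": unquote(parts.password or ""),
        "dbname": parts.path.lstrip("/"),
    }
    return " ".join(f"{k}={v}" for k, v in fields.items() if v)

# Made-up credentials; '@' in the password is percent-encoded as %40.
uri = "postgres://my_user:p%40ss@example-cluster.redshift.amazonaws.com:5439/dev"
print(dsn_from_conn_uri(uri))
# host=example-cluster.redshift.amazonaws.com port=5439 user=my_user password=p@ss dbname=dev
```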


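Running it on Airflow for real

Put the .py file into the airflow-setup/dags folder.

Check it in the web UI (this requires restarting through Docker).

The Variable must be saved correctly first; otherwise the DAG fails with an import error.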

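Other Q&A

What are the pros and cons of multiple tasks versus a single task?

With a single task, a long-running job that fails has to be rerun from the beginning. If it is split into several tasks, it can resume from the task that failed.

However, creating too many tasks makes the DAG take longer to run and puts load on the scheduler.

So split the work into a reasonable number of tasks.

PostgresHook's autocommit defaults to False. In that case, an explicit BEGIN is meaningless because a transaction is already open.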

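Managing Airflow variables

airflow.cfg (the Airflow configuration file)

- Where is the DAGs folder specified, and under which key?
- When a new DAG is created in the DAGs folder, when does the Airflow system actually notice it? > 5 minutes
- What is the name of the key that sets this scan interval?
- Which section must be changed to control Airflow from outside through its API?
- What word must a Variable's name contain for its value to be encrypted?
- Something must be done before changes to this configuration file take effect. What is it?
- What key is used to encrypt the contents of the metadata DB (e.g. MySQL or PostgreSQL)?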
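airflow.cfg is an INI-style file, so its sections and keys can be inspected with Python's configparser. The excerpt below is made up for illustration and uses Airflow 2.x key names, which may differ in other versions. It includes dags_folder under [core] and dag_dir_list_interval under [scheduler], which defaults to 300 seconds (the 5 minutes noted above). It also includes auth_backends under [api] and fernet_key under [core]; Airflow uses fernet_key to encrypt connection passwords and Variable values in the metadata DB.

```python
import configparser

# Excerpt shaped like an Airflow 2.x airflow.cfg; values are illustrative, not from a real install.
SAMPLE_CFG = """
[core]
dags_folder = /opt/airflow/dags
fernet_key =

[scheduler]
dag_dir_list_interval = 300

[api]
auth_backends = airflow.api.auth.backend.basic_auth
"""

cfg = configparser.ConfigParser()
cfg.read_string(SAMPLE_CFG)

print(cfg.get("core", "dags_folder"))                                     # /opt/airflow/dags
print(cfg.getint("scheduler", "dag_dir_list_interval") // 60, "minutes")  # 5 minutes
print(cfg.get("api", "auth_backends"))  # airflow.api.auth.backend.basic_auth
```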
